A Team of 1

Team management is a big, complicated process, and in the game industry we have entire team members dedicated to it. Producers are important. One of the hardest things about taking a project from beginning to end entirely by yourself (code, art, design, and don’t forget production) is that last part.

Most of the tools and techniques out there for project management are built around teams, because generally you have multiple people working together and trying to meet each other’s needs. When you do it all yourself, you are your own blocker at all times. If you try to produce yourself in the normal manner, with conventional tools, sure, it will work, but you will have created quite the waterfall nightmare.

Again, let me stress: much of the point of conventional production pipelines is communication between people. If the only person you need to communicate with is yourself, inline may be the best way. Right where you are going to look.

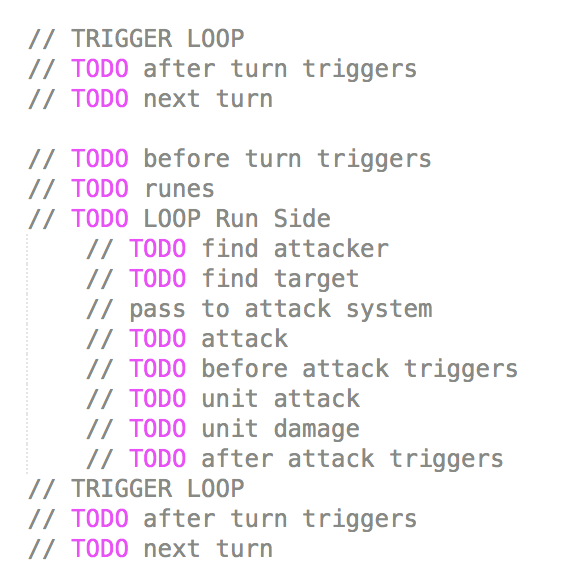

As I went to rewrite my combat loop, after I had blocked out enough of my Models to start working, the first thing I did was write my TODO list. Not in Jira, not in a spreadsheet, but right there, inline, before I even started trying to write the code. What winds up happening (even though new TODOs show up as you work, and concepts unpack into more complex steps) is that these TODOs get replaced by actual code, or moved, as needed.

Looking at this, I start to imagine working with other people and some kind of ticket system. Each of these TODOs should have a ticket associated with it, and suddenly you have an extension of existing best practices if you ever scale your team above 1. No more mystery TODOs in code; there is an external point of contact. One extra nice-to-have might be a version number to indicate when each should probably be done by.
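
As a rough illustration of the ticketed-TODO idea, here is a minimal sketch of a script that audits TODO comments. It is written in Python as standalone pipeline tooling rather than in the project's Unity C#. The `// TODO(TICKET-ID, vX.Y): ...` format, the `CCG-` ticket prefix, and the function name are all assumptions made up for this example, not an existing convention.

```python
import re

# Hypothetical convention: "// TODO(TICKET-ID, vX.Y): description".
# The ticket and version fields are illustrative, not an established standard.
TODO_ANY = re.compile(r"//\s*TODO\b(.*)")
TODO_TRACKED = re.compile(
    r"^\((?P<ticket>[A-Z]+-\d+)(?:,\s*v(?P<version>\d+(?:\.\d+)*))?\):"
)


def audit_todos(source: str):
    """Return (tracked, untracked) TODO entries found in a block of source text."""
    tracked, untracked = [], []
    for number, line in enumerate(source.splitlines(), start=1):
        found = TODO_ANY.search(line)
        if not found:
            continue
        match = TODO_TRACKED.match(found.group(1))
        if match:
            tracked.append((number, match.group("ticket"), match.group("version")))
        else:
            untracked.append((number, line.strip()))
    return tracked, untracked


sample = """\
// TODO(CCG-12, v0.2): send attack event to the attacker
// TODO: resolve pre-attack triggers
int damage = 4; // TODO(CCG-15): read from unit data
"""
tracked, untracked = audit_todos(sample)
```

Anything that lands in `untracked` is a "mystery TODO" with no external point of contact. A team could fail a build on those, or simply list them.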

For actual assets I will probably fall back to a spreadsheet, but I’ll be doing a lot of placeholder work, so for each of those assets in Unity I’ll set a “Label” (not to be confused with Tags, even though it uses a tag icon, at the lower right). It might even make sense to put version/release numbers here.

In theory, labeling assets like this, even in a team environment, helps indicate how assets should be treated and leaves less mystery around them down the road.

Anyway, back to my combat loop. I’m down to needing to send actual attack events again. I’m planning to send them directly to the object attacking, then out to the trigger system (pre attack), then to the object defending, and again out to the trigger system (post attack).
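
The four-stop routing described above could be sketched roughly as follows. This is a language-neutral illustration in Python, not the actual Unity C# combat loop. Every class and method name here (`AttackEvent`, `Unit`, `TriggerSystem`, `resolve_attack`) is hypothetical, and the shield trigger exists only to show a pre-attack handler changing the event before the defender sees it.

```python
from dataclasses import dataclass, field


@dataclass
class AttackEvent:
    attacker: str
    defender: str
    damage: int
    log: list = field(default_factory=list)  # order of stops, for inspection


class Unit:
    def __init__(self, name, health):
        self.name, self.health = name, health

    def on_attacking(self, event):
        event.log.append(f"{self.name} attacks")

    def on_defending(self, event):
        self.health -= event.damage
        event.log.append(f"{self.name} defends")


class TriggerSystem:
    """Stand-in for the trigger system; handlers may modify the event."""

    def __init__(self):
        self.handlers = {"pre_attack": [], "post_attack": []}

    def dispatch(self, phase, event):
        event.log.append(phase)
        for handler in self.handlers[phase]:
            handler(event)


def resolve_attack(attacker, defender, triggers, damage):
    event = AttackEvent(attacker.name, defender.name, damage)
    attacker.on_attacking(event)             # 1. the attacking object
    triggers.dispatch("pre_attack", event)   # 2. trigger system, pre attack
    defender.on_defending(event)             # 3. the defending object
    triggers.dispatch("post_attack", event)  # 4. trigger system, post attack
    return event


triggers = TriggerSystem()
# Hypothetical pre-attack trigger: a shield that reduces damage by 1.
triggers.handlers["pre_attack"].append(
    lambda e: setattr(e, "damage", max(0, e.damage - 1))
)
knight, ogre = Unit("Knight", 10), Unit("Ogre", 10)
event = resolve_attack(knight, ogre, triggers, damage=4)
```

Because the event is a plain data object passed from stop to stop, it can be stored or replayed without a view.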

So You Want to Make a CCG

Finding myself between jobs, I looked at a recurring problem with portfolio work as a TA. I can point to projects I’ve worked on and describe what I’ve done, but that all gets very ambiguous, since so much lives on a backend that I no longer have access to. It’s also been years since I’ve worked on a project where I got to really leverage some of my skills in a demonstrable fashion. I started tooling around with making generic tech that I’ve had in mind for a while, and came to the conclusion: let’s make a CCG, because that’s not hard (eheheh… sigh).

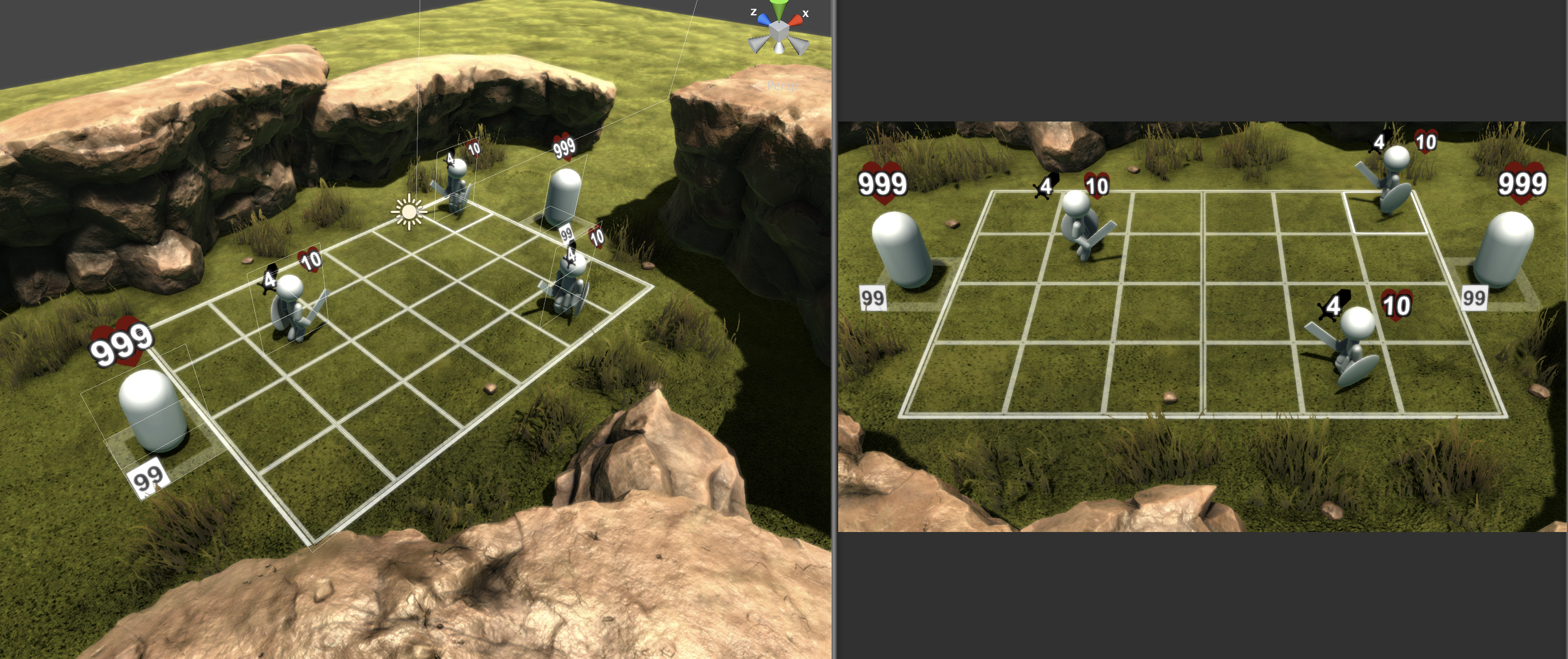

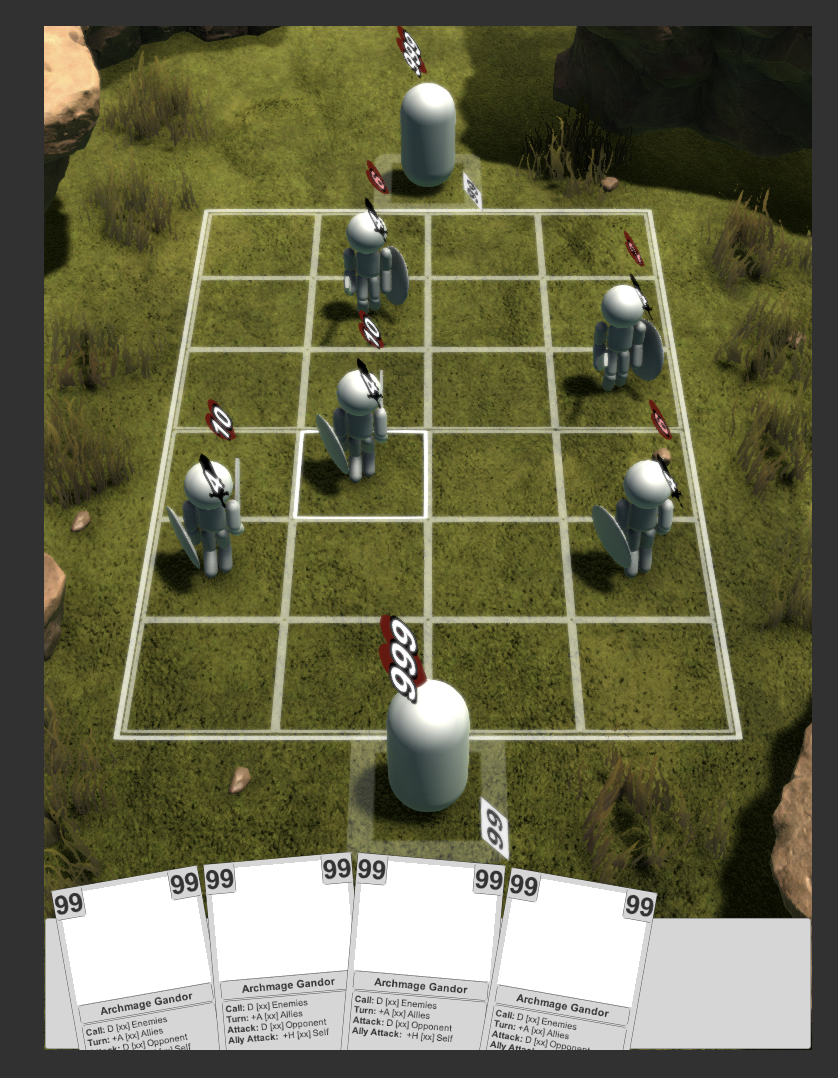

I didn’t grab any screenshots through the very first stages of ‘capsule wars’, in which I fussed with the general structure of figuring out how I wanted to control units moving back and forth, and smacking each other, but once I got the basic timing sequence down I went in and set up some placeholder characters and rudimentary animations.

So I got that working that way. Click a square and it puts down a default 4:10 unit, which picks a target, walks across, and smacks the opponent. Then I got mired down in the planning phase, and went in circles.

Ultimately I concluded that for my real goals I needed to back up, come at this from a holistic top-down approach, and make the whole game state serializable. (Because that’s easy!) The goal here is to create a transferable state model for everything, in part so that an AI can run simulations to pick a move. MVC (Model, View, Controller) is mostly the pattern, but I’m introducing a D as well, for Data. Data objects will actually make up a lot of the functional parts of the game: non-volatile building blocks such as the selection filter.

The concept here is that the code will never need to instance the concept of a “Ranged Allies Around Source” filter; instead the object name itself will be descriptive wherever it is referenced in other components such as Triggers. Ranged is likely to be an automated Keyword on Units based on their attack type. I’m using strings because, while the compares are less efficient, the flexibility is much higher than with Enums. This way an entirely new Keyword can be introduced to the game with no code or binary changes, and the code never references the theme of the world. Do you have arcane magic, or nature magic, or neither? The code doesn’t have to care.

Filters are intended to work in two directions: Event sourcing and Action targeting. For instance, let’s say a card does damage to all enemies when it enters gameplay. The trigger will have an Appears event with a Filter (Main: Self, Area: n/a, Role: Source), then a Damage effect with a Filter (Main: Enemy, Area: All, Role: All). Conversely, if that first filter were set to Enemy rather than Self, and we reused it both times, then every time an Enemy appeared it would take damage.
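
To make the two directions concrete, here is one possible reading of the filter model, sketched in Python rather than the project's C#. The field semantics are assumptions for the sake of the example. Role "Source" is taken to mean "only the unit the event is about" and Role "All" to mean "every matching unit on the board". The Area field is left out entirely. Keywords are plain strings, as described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    name: str
    owner: str
    keywords: frozenset = frozenset()  # Keywords are plain strings, not enums


@dataclass(frozen=True)
class Filter:
    main: str          # "Self", "Ally", or "Enemy", relative to the trigger's owner
    role: str          # "Source" = only the event's subject; "All" = whole board
    keyword: str = ""  # optional Keyword the unit must carry

    def select(self, owner, subject, board):
        candidates = [subject] if self.role == "Source" else list(board)
        return [u for u in candidates if self._matches(owner, u)]

    def _matches(self, owner, unit):
        relation = {
            "Self": unit is owner,
            "Ally": unit.owner == owner.owner and unit is not owner,
            "Enemy": unit.owner != owner.owner,
        }.get(self.main, False)
        return relation and (not self.keyword or self.keyword in unit.keywords)


@dataclass(frozen=True)
class Trigger:
    event: str
    source: Filter  # event sourcing: which unit's event fires the trigger
    target: Filter  # action targeting: which units receive the effect

    def targets(self, owner, event, subject, board):
        if event != self.event or not self.source.select(owner, subject, board):
            return []
        return self.target.select(owner, subject, board)


mage = Unit("Mage", "P1")
archer = Unit("Archer", "P2", frozenset({"Ranged"}))
brute = Unit("Brute", "P2")
board = [mage, archer, brute]

# Damage all enemies when this card appears.
on_enter = Trigger("Appears", Filter("Self", "Source"), Filter("Enemy", "All"))
# The same filter reused for both roles: each enemy that appears is itself hit.
enemy_source = Filter("Enemy", "Source")
punish = Trigger("Appears", enemy_source, enemy_source)
```

Under these assumptions, `on_enter` fires only when its owner appears and targets every enemy, while `punish` fires whenever an enemy appears and targets just that enemy. A new Keyword such as "Ranged" needs no code change, only data.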

After all that I took a break and grabbed some Free Unity Assets just so I could look at something prettier whenever I switched over to Unity.

Right now I’m trying not to stress over art. There are a lot of options, though I’m leaning toward full 3D rather than the 2D puppets common in the genre. The reason is the ability to do other nifty things.


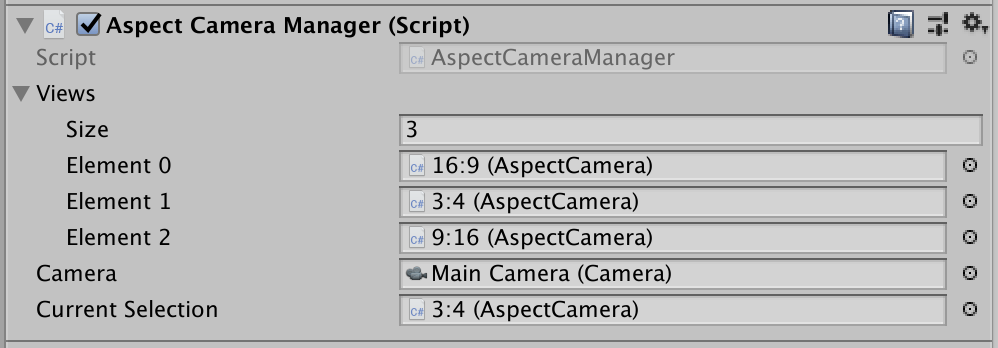

Obviously I’ll have to change my UnitHUDs to some kind of billboard, but that was the plan anyway. The main trick for selecting the different views is a simple function that compares the absolute value of Vector2.Angle between each of two Aspect Ratios and the screen, picks the closer, then simply applies its properties.

    // Returns whichever AspectSelection (this or other) has an aspect
    // closer in angle to the target screen aspect.
    public AspectSelection ReturnCloser(Vector2 target, AspectSelection other)
    {
        // Nothing to compare against. The implicit bool check assumes
        // AspectSelection derives from UnityEngine.Object.
        if (!other)
            return this;

        // Vector2.Angle is already unsigned, so Mathf.Abs is only a safeguard.
        if (Mathf.Abs(Vector2.Angle(target, other.aspect)) < Mathf.Abs(Vector2.Angle(target, aspect)))
        {
            return other;
        }

        return this;
    }

The deeper trickery is writing a helper singleton that allows scripts to register for screen events, though I plan to transition this singleton to a simple static class that attaches to an Update singleton that non-MonoBehaviours can access. The reason for all of this silliness is that Unity never got around to providing a screen event system of its own, and the only way to detect screen changes is to check manually in, say, Update.
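
The register-and-poll idea is independent of Unity, so here is a minimal sketch of it in Python rather than C#. The `ScreenWatcher` name and its methods are hypothetical. In Unity the size provider would presumably read the current screen width and height, and `update` would be driven from an Update call.

```python
class ScreenWatcher:
    """Polls a size provider and notifies listeners only when the size changes."""

    def __init__(self, get_size):
        self._get_size = get_size  # callable returning (width, height)
        self._last = get_size()
        self._listeners = []

    def register(self, callback):
        self._listeners.append(callback)

    def update(self):
        """Call once per frame; the equivalent of checking inside Update."""
        size = self._get_size()
        if size != self._last:
            self._last = size
            for callback in self._listeners:
                callback(*size)


current = [(1920, 1080)]
seen = []
watcher = ScreenWatcher(lambda: current[0])
watcher.register(lambda w, h: seen.append((w, h)))
watcher.update()           # no change, nothing fires
current[0] = (1080, 1920)  # e.g. the device rotates
watcher.update()           # change detected, listeners fire
watcher.update()           # unchanged again, nothing fires
```

Listeners only pay for the work when something actually changed, which is what an aspect-selection step wants.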

Anyway, that’s enough for now. Back to rewriting the turn flow as a viewless stored structure, then reading the view out. Wish me luck.

Measuring the Problem

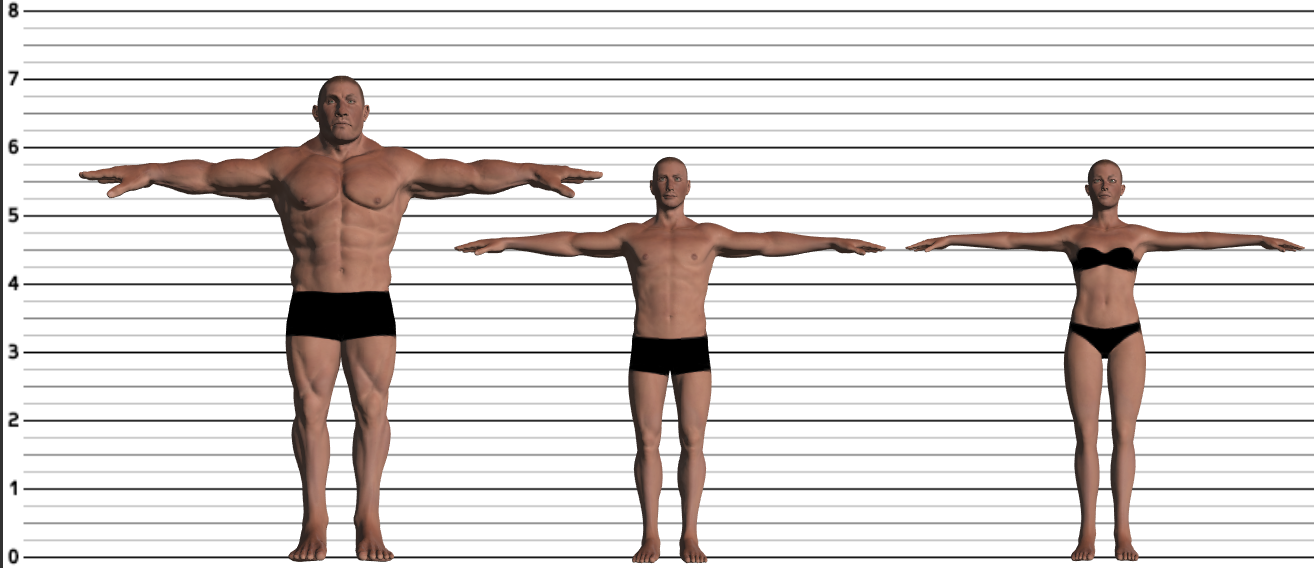

Not every problem that needs solving is necessarily all that technical. As a sideline I’m an author, and since I am also an artist I noodle around with character art. Adobe Fuse, which Adobe picked up from Mixamo, is an appealing place to dig in and really try to work out the visual appearance of a character. If, however, you want a line of characters that are all at the intended heights relative to each other, or that hit specific benchmarks, the toolset is currently a bit limited.

However, given one known value (and I am taking an Adobe representative’s word on this), that the Brute is 7′ tall, one can work around the problem.

Step 1: Make a measurement image. To save you some time, here’s mine:

- Import the image as an image plane in Fuse.

Step 2: Make or import a character with a known overall height.

- Align the character to the grid.

- Bookmark the camera at which the character lines up. (In Image Plane Settings.)

- You’ll need this bookmark when switching files to get to your measuring view.

Step 3: Adjust body parts to taste.

- If you want to get particular about arm length you can use a full grid.

- I have a request out to Adobe to get baseline figures for all models, but if you feel a character is proportionally correct, and you know how tall they are you can use any character as your baseline.

Here were my results, assuming the Brute is 7′ and with the Adobe representative throwing out 5′-6′ for average model heights. Seems close at the very least. Happy arting!
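
The arithmetic behind the measuring trick is a simple proportion: the known character's height divided by the number of grid units it spans gives a scale, and any other character measured in the same bookmarked view is multiplied by that scale. A small Python sketch follows. The grid readings (14 and 11.5 units) are invented purely to show the calculation and are not measurements from Fuse.

```python
def feet_per_grid_unit(reference_height_ft, reference_grid_units):
    """Scale implied by a character of known height spanning a known grid span."""
    return reference_height_ft / reference_grid_units


def format_feet_inches(height_ft):
    """Format a height in feet as feet and whole inches, e.g. 5'9"."""
    total_inches = round(height_ft * 12)
    return f"{total_inches // 12}'{total_inches % 12}\""


# Assumed reading: the 7' Brute spans 14 grid units in the bookmarked view.
scale = feet_per_grid_unit(7.0, 14.0)      # 0.5 ft per grid unit
# A second character measured at 11.5 grid units in the same view.
height = format_feet_inches(11.5 * scale)  # 5.75 ft
```

The same scale only holds while the camera bookmark is unchanged, which is why the bookmark matters when switching files.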

Zombie Cancer Atlases – Just Say No

It’s an inevitable temptation that shows up somewhere in a UI pipeline: for the same asset to appear in more than one atlas. Resist this temptation, for if there is a devil, it is indeed the devil whispering in your ear and leading you down the slow path of damnation.

I mean to offend no one here, speaking in hyperbole, but the behavior of an asset replicated in more than one atlas is very analogous to a cancer. In many tech pipelines it is very easy for each instance of the asset to be used in different places. If one of these atlases becomes deprecated, you will suddenly find that countless unexpected things are relying on it.

Efforts to eradicate this misuse will constantly get undone during integrations and other bug fixing by mostly well-meaning individuals just trying to ship. On a project with high branch turnover and a long update cycle this can become infuriating for all parties involved. Each person touching the UI pipeline amplifies the potential for this cascade of failures, backslides, and perpetual invasive struggles to undo something that should never have been done in the first place.

This advice goes double for any case where atlas restructuring is occurring. Under no circumstances, not even for one build, should an old atlas with redundant entries be allowed to persist.

The source of this cautionary tale is a 2048-square monster HUD atlas that also contained tutorial assets. Not long after its inception it was realized that this atlas was a bad idea, and it was broken down into three smaller atlases: transparent HUD, opaque HUD, and a separate tutorial atlas. The original large atlas was not immediately removed during development, and hundreds of instances of tutorial elements referenced from it crept their way through the game, rendering the monster nearly impossible to slay.
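
A duplicate check is cheap to automate. The following Python sketch assumes you can export, by whatever means your pipeline allows, a mapping of atlas name to the sprite names it contains. The atlas and sprite names below are made up to mirror the story above.

```python
from collections import defaultdict


def find_duplicated_sprites(atlases):
    """Map each sprite found in more than one atlas to the atlases holding it."""
    owners = defaultdict(list)
    for atlas_name, sprites in atlases.items():
        for sprite in set(sprites):
            owners[sprite].append(atlas_name)
    return {s: sorted(a) for s, a in owners.items() if len(a) > 1}


# Hypothetical export: the old monster atlas was never removed.
atlases = {
    "hud_monster": ["health_bar", "minimap_frame", "tutorial_arrow"],
    "hud_transparent": ["health_bar"],
    "hud_opaque": ["minimap_frame"],
    "tutorial": ["tutorial_arrow"],
}
duplicates = find_duplicated_sprites(atlases)
```

Run as a build step that fails on any non-empty result, this would catch a lingering atlas in the first build rather than hundreds of references later.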

So – be wary, lest the Zombie-Cancer-Atlas come for you too.

VFX are UX

Ground Effect, League of Legends ©Riot Games

A common misconception is that VFX are secondary: simply a pretty part of a game, or even to an extent extraneous. Failing to grasp just how much VFX factor into usability and the general user experience is a dangerous pitfall.

These are not simple fluff tacked on, and if they are, then the UX process has already failed. Good VFX must be communicative – they should feel awesome not simply because they are ‘pretty’ or high tech, but because they convey crucial information that helps the user make decisions, learn from mistakes, and gauge the world with which they are interacting.

Just a few examples of things VFX should tell the player:

- The area of this effect is this large.

- The area of this effect is this shape.

- This did something significant.

- This is good.

- You have succeeded.

- You have succeeded profoundly.

- You are running out of time.

- You have failed.

- OH BLOODY OUCH NOT AGAIN!

Casting Indicator, Secret World ©Funcom GmbH

Many modern RPGs have begun to embrace secondary ground indicators for area damage. It’s a direction that I highly applaud, but it cannot be allowed to excuse the VFX themselves from selling an event. Doing so will leave the player feeling unrewarded and disconnected, and will fail to reinforce what has happened. You can give the user a fancy modern take on a cast bar (the best implementation I’ve seen of this trend is in Secret World), but a cast bar, however fancy, does not excuse a lack of communication from the actual VFX.

Selling with VFX really is make or break for the emotional investment of the player. If the player pulls off something complicated and good, then a lack of distinct, visual, and preferably visceral feedback is inexcusable (see combos in Guild Wars 2). Though every situation will vary, the answer is not always to turn it up to 11 – sometimes important effects would be selling the impact of an event, but are simply getting lost because less important visuals are turned up too high.

Frozen Enemy, Diablo 3 ©Blizzard Entertainment

Details matter, and are worth it. They may not be noticed every time they happen, but when they are, you make yourself, your product, and the company look pro. Take something as simple as icicles on a frozen enemy (see Diablo 3). Traditionally it’s been written off as good enough to change the shader to apply a frosty look – but why not go that extra step? It’s not even that hard – you have joint positions, so generate secondary effects relative to these, and for bonus points do a linear distribution between joint locations.
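
The "linear distribution between joint locations" part is just interpolation. Here is a minimal Python sketch of it, with made-up joint positions. In an engine these points would be where the icicle meshes or particles get spawned, and the function names are illustrative only.

```python
def lerp(a, b, t):
    """Linearly interpolate between two points given as tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def distribute_along_bone(start, end, count):
    """Return `count` evenly spaced points strictly between two joint positions."""
    return [lerp(start, end, (i + 1) / (count + 1)) for i in range(count)]


# Hypothetical joint positions for an upper arm.
shoulder = (0.0, 2.0, 0.0)
elbow = (1.0, 2.0, 0.0)
icicle_points = distribute_along_bone(shoulder, elbow, 3)
```

Sampling strictly between the joints avoids stacking effects on the joints themselves, which presumably already carry the primary effect.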

Hierarchy – Don’t Over Do It

Hierarchy is a powerful, necessary tool in UI – but when misused it quickly becomes a destructive agent against all that is usable. There is nothing new here when it comes to the end user; reducing the drilling depth of our final product UI is a mainstay of the industry. Yet, as with all things high-minded, what we do for the final product often gets lost in the pipeline. Of course nothing below is a hard and fast rule, but these are things to think about.

There are several possible categories of offense in the arena of out of control Hierarchy:

Redundant Naming:

- FX/explosions/fx_explosion_small1.fx

- FX/explosions/small1.fx

- UI/textures/buttons/ui_big_red_button1.png

- UI/textures/button_red_big1.png

- Note: keep your categories first, descriptors last.

- Preferably don’t have pre-baked color like this, but that’s another topic.

The problem with redundant naming is that it breeds inconsistency, and fights to maintain it, often against a development clock. That said, if you need unique file names and your target file system ignores extensions (a problem in Unity3D bundles, for instance), there are still alternatives to keep this in check.

- FX/explosions/grenade_impact_small1.fx

- Favor more meaningful names to differentiate.

- FX/explosions/grenade_impact_small1_tex.fx

- If a texture/material is going to be non-generic, then favor putting it with what uses it.

- If they can be generic, then favor a generic name/location reducing conflict.

- Save suffixes for subordinate parts.

- Prefer suffixes to prefixes because this keeps related items together in lists.

- Think of this as implied hierarchy.

- UI/textures/buttons/button_red_big1_tex.png

- Note that the ui_ previously affixed to this did not solve the problem if a material, or other related game file might also get this name.
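
The "suffixes keep related items together" point is easy to see by sorting two hypothetical file listings, one using a `_tex` suffix and one using a `tex_` prefix. The file names are illustrative only.

```python
# Same assets, named with a suffix for the subordinate texture...
suffixed = [
    "grenade_impact_small1_tex.png",
    "smoke_puff1.fx",
    "grenade_impact_small1.fx",
    "smoke_puff1_tex.png",
]
# ...and with a prefix instead.
prefixed = [
    "tex_grenade_impact_small1.png",
    "smoke_puff1.fx",
    "grenade_impact_small1.fx",
    "tex_smoke_puff1.png",
]

sorted_suffixed = sorted(suffixed)
sorted_prefixed = sorted(prefixed)
```

In `sorted_suffixed` each texture lands directly after the effect that owns it. In `sorted_prefixed` all the `tex_` files cluster at the bottom, away from what they belong to. That adjacency is the implied hierarchy.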

Feature Set Naming:

- Texture_ALPHA_UNLIT_VCOL_NOFOG_NOCULL.shader

- Heaven forbid this gets mapped to:

- Texture/ALPHA/UNLIT/VCOL/NOFOG/NOCULL.shader

Yes, I’ve seen this done as an actual standard – the resulting drill-down was unusable, and the naming intimidating or even meaningless to many artists. This was done with the entirely noble goal of making it easy to identify shaders that had already been written – but on occasion these existing shaders were discovered only when applying the final naming convention to a duplicate.

It would be far more productive to maintain a catalog of shaders tagged by feature set – and then name shaders according to use, and deviation from expected settings within that use. The above example shader is a simple 3D UI shader – all the overrides from default are standard to such UI shaders. As such:

- UI_Alpha.shader

- UI/Alpha

- UI/Opaque

- UI/Opaque_Diffuse

- Note this lists Diffuse as it varies from standard.

- It probably also Back Face Culls – but do we really care in the naming?
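
A catalog tagged by feature set can be as small as a dictionary. The Python sketch below shows the lookup side: shaders keep short, use-based names, and the feature tags live in the catalog instead of the file name. The tag vocabulary and the `Particles/Alpha` entry are assumptions added for illustration.

```python
# Hypothetical catalog: shader name -> set of feature tags.
SHADER_CATALOG = {
    "UI/Alpha": {"texture", "alpha", "unlit", "vertex_color", "no_fog", "no_cull"},
    "UI/Opaque": {"texture", "opaque", "unlit", "vertex_color", "no_fog", "no_cull"},
    "Particles/Alpha": {"texture", "alpha", "unlit", "vertex_color", "no_cull"},
}


def find_shaders(required, catalog=SHADER_CATALOG):
    """Return names of shaders whose feature tags include every required feature."""
    required = set(required)
    return sorted(name for name, tags in catalog.items() if required <= tags)


alpha_unlit = find_shaders({"alpha", "unlit"})
alpha_no_fog = find_shaders({"alpha", "no_fog"})
```

Searching the catalog before writing a shader serves the original goal of finding what already exists, without forcing artists to drill through feature-named folders.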

What about the redundant shaders, you may ask? While sharing shaders that happen to have the same feature set is good for some points of efficiency, it creates unintended development hiccups if you lose track of what shares shaders and absently change a shader to solve a problem in one context (particle systems, for instance), only to find out it was also used in another (UI, for instance). Further, just because certain classes of shaders are similar does not mean they are identical:

- Texture, Unlit, Alpha, Vertex Color, No Back-face Culling

- UI Shaders tend to need to ignore fog

- Particle Shaders tend to need to accept fog

- Texture, Specular, Opaque, Normal

- Environment shaders tend to need light maps

- Actor shaders tend not to need light maps

Over Categorization, And Distribution

- Levels/Environments/Swamp/FX/Environment/

This is a tricky topic. For instance:

- Sometimes you need to bundle effects for separate download.

- Sometimes there are just so many effects that the extra categories help.

Keeping all the parts of your bundle together is tempting, but in some systems this actually leads to inefficiency. Say you make your first Swamp level and put it out there as DLC. All is fine and good – but now you need to add a new swamp level. Depending on the system, any or all of the following could now be a problem:

- The client has to load/unpack the entirety of the first Swamp bundle to run Swamp2.

- In addition to unpacking Swamp2’s full resource set.

- The client has to re-download an updated bundle to add the new Swamp2.

- Including all the assets for Swamp1.

- You now have disassociation.

- Some effects for Swamp 2 live in one nested directory, others in another.

- You duplicate resources across Swamp1 and Swamp2.

Let’s propose an alternative:

- FX/Environments/Swamp/

- FX/Environments/Swamp2/

- This being a caveat only if you can’t dynamically merge the bundles

Having multiple bundles for the same level load is not a bad thing, particularly in systems that have to load the whole bundle into memory to unpack it. It has the side benefit that you can indicate your load progress more incrementally, either verbosely for debug or more discreetly with the loading bar in the final client. This can be applied not only to FX, but to textures/materials, sounds, etc. It does create its own form of disassociation, but one that is less impactful to the artists. For code it largely changes the order of hierarchy, or you could internally re-categorize the assets in the code/load process, which is probably better than dealing directly with directory hierarchies.

Always Expect Order

As a closing piece of advice, here is something that runs vaguely counter to the rest: be very cautious about expecting things to be unique. While not critical, planning for order does keep your files cleaner and easier to use.

- Textures/fx/cloud_tiling.png can very easily wind up with:

- cloud_tiling_2.png

- cloud_tiling_3.png.

- When you make that first asset with a given name, go ahead and give it a 1

- Or if you are so inclined a 0.

- If you know there will be a whole lot start with 00, or 01.

- When possible prefer a descriptor to a number:

- grenade_impact_small.fx

- grenade_impact_large.fx

As I noted early on – prefer to put categories first, adjectives second.

- button_blue_small.png not small_blue_button.png

- explosion_dirt_large.fx not large_dirt_explosion.fx

- If an effect can be reused there is always a high probability it will be.

- grenade_impact_small.fx is acceptable if you know for sure it won’t be reused

- But why assume?

- That explosion may be reusable.

- Or it may get replaced for its original purpose – and reused elsewhere.

- Sure you can rename when this happens, but it’s something to think about.
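
The "give the first asset a 1" advice can be checked mechanically. This Python sketch flags base names that have no number while numbered siblings exist, which is the `cloud_tiling.png` situation above. The function name and regular expression are illustrative, and the walrus operator needs Python 3.8 or later.

```python
import re

# A stem followed by an optional underscore and a trailing index, e.g. "cloud_tiling_2".
NUMBERED = re.compile(r"^(?P<stem>.+?)_?(?P<index>\d+)$")


def unnumbered_with_siblings(filenames):
    """Return base names that lack a number while numbered siblings exist."""
    stems = [name.rsplit(".", 1)[0] for name in filenames]
    numbered_stems = {m.group("stem") for s in stems if (m := NUMBERED.match(s))}
    return sorted(s for s in stems if s in numbered_stems)


files = [
    "cloud_tiling.png",
    "cloud_tiling_2.png",
    "cloud_tiling_3.png",
    "grenade_impact_small.fx",
]
needs_number = unnumbered_with_siblings(files)
```

Here only `cloud_tiling` is flagged; `grenade_impact_small` uses a descriptor instead of a number and has no numbered siblings, so it is left alone.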

Death Dome

A few shots from Death Dome. ~99% of the effects in this game, along with the back-end tech that drives the text motion graphics, were made by me. For a better view, the game is available as a free download on iOS and Android.

- Perfect Parry & Use Flux

- Final hit from flux mode.

- Dynamic Text Effect

- Phoenix Rises Again

Text Demo

All text presented in the following video is displayed live in Unity without preexisting text systems. The justification alignment, word wrapping, line spacing, mesh generation, shader, and motion graphic effects were written by me. This is a technical demonstration using Arial, and the font texture and data were exported with BMFont.

Far more impressive in the original 180k Unity Web Player form.

The motion graphics part of the system was used for:

The text formatting engine has been folded into the main line for future Glu Mobile projects at their Kirkland studio.